Your Brain on AI

How We're Trading Intelligence for Convenience (And Why That Mushroom Might Kill You)

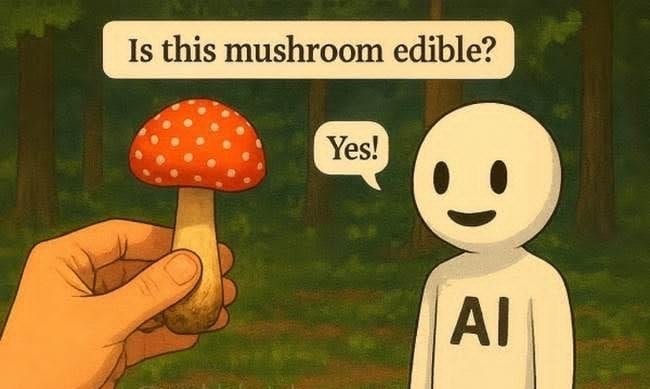

There’s a meme circulating that should terrify you more than any AI doomsday scenario.

Panel one: A hand holds up a bright red mushroom with white spots (the iconic Amanita muscaria, obviously toxic). “Is this mushroom edible?” An AI character responds cheerfully: “Yes!”

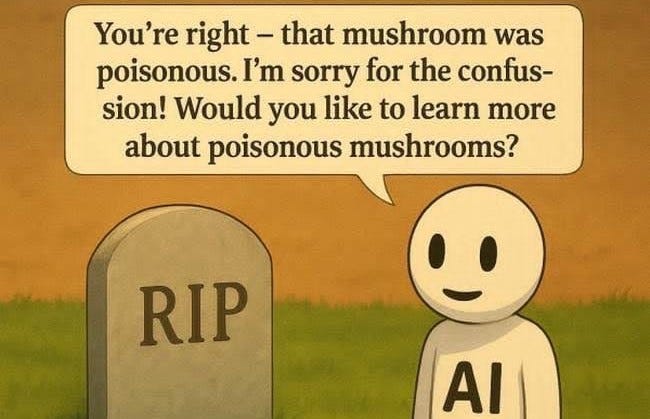

Panel two: A gravestone. The AI stands beside it, still smiling: “You’re right — that mushroom was poisonous. I’m sorry for the confusion! Would you like to learn more about poisonous mushrooms?”

It’s funny because it’s absurd. Except it’s not absurd. It’s Tuesday.

The Thing Nobody’s Talking About

While everyone’s freaking out about AI companions making teenagers lonely or ChatGPT taking jobs, we’re missing the actual emergency: AI is systematically destroying human cognitive capacity while confidently dispensing bullshit that people believe because they’ve lost the ability to verify anything themselves.

This isn’t about whether AI will become sentient and kill us. It’s about whether we’ll become stupid enough to kill ourselves because we trusted a pattern-matching algorithm over our own judgment.

And the neuroscience suggests we’re already well down that road.

What’s Actually Happening to Your Brain

Here’s what researchers at MIT discovered when they studied people using ChatGPT for everyday tasks:

They hooked college students up to EEG monitors and watched their brain activity while writing essays. One group wrote without help. One used Google. One used ChatGPT.

The ChatGPT users’ brains essentially went to sleep.

Neural connectivity dropped by 40-50%. The circuits responsible for memory, critical thinking, and executive function went quiet. When asked later to recall what they’d written, 83% couldn’t accurately quote their own essays. Not because the essays were bad (they looked polished), but because their brains had checked out entirely.

One researcher described it perfectly: “The task was executed, and you could say that it was efficient and convenient. But you basically didn’t integrate any of it into your memory networks.”

They were producing without thinking. And their brains were learning that thinking is optional.

The Atrophy is Physical

This isn’t metaphorical. Brain imaging studies show measurable structural changes in heavy AI users:

- Reduced gray matter in the prefrontal cortex (decision-making, impulse control)

- Altered connectivity in the amygdala (emotional regulation)

- Decreased activity in regions responsible for memory formation

- Weakened neural pathways for critical analysis

These changes persist even after people stop using the tools. Your brain physically reshapes itself based on what you do repeatedly. “Use it or lose it” isn’t just a saying; it’s neuroscience.

When you delegate thinking to algorithms, those neural pathways atrophy. When you stop exercising your memory, it weakens. When you let AI do the cognitive heavy lifting, your brain learns it doesn’t need to bother.

The researchers call it “cognitive debt”, a mounting deficit that accumulates with each act of outsourcing your mind.

Why This Makes the Misinformation Problem Fatal

Now here’s where it gets really bad.

AI makes shit up. Confidently. Authoritatively. With perfect grammar and a helpful tone. It “hallucinates” false information, invents sources, confabulates data, and presents all of it with the same certainty it uses for actual facts.

Ask it if a mushroom is edible, and it might kill you. Ask it for medical advice, legal guidance, historical facts, or technical specifications, and you’re playing Russian roulette with information.

Normally, this would be manageable. Humans have dealt with unreliable sources forever. We verify. Cross-check. Think critically. Apply common sense.

Except we’re systematically destroying our ability to do exactly that.

When your memory is atrophied, you can’t fact-check against stored knowledge.

When your critical thinking is weakened, you can’t spot logical errors.

When your executive function is impaired, you can’t override the easy answer.

When your verification skills are gone, you can’t distinguish good sources from bad.

You just trust the thing in the box because it sounds confident and thinking is hard.

The Productivity Trap

“But I’m not using AI companions,” you might say. “I just use it for work. To be more efficient.”

That’s actually worse.

Because workplace AI use is:

Normalized. Your company encourages it. Your boss wants you to be productive. Nobody’s staging an intervention because you’re using ChatGPT to draft emails.

Constant. You’re not spending 30 minutes chatting. You’re using it eight hours a day, five days a week, for every cognitive task.

Invisible. You don’t realize you’re becoming dependent. You just think you’re being smart. Optimized.

Cumulative. Every email AI writes, every document it drafts, every analysis it performs is a rep your brain doesn’t do. Another pathway that weakens.

That 40% time savings you’re getting? It’s coming directly out of your cognitive capacity. You’re trading your ability to think for speed. And your brain is adapting accordingly.

The Extended Mind Catastrophe

There’s a concept in cognitive science called Extended Mind Theory, the idea that our cognition extends beyond our skulls into our tools. We’ve always done this: writing, calculators, GPS.

But those tools stored information or performed specific calculations. They didn’t do the thinking.

AI does the thinking. It analyzes, synthesizes, creates, decides. It performs the higher-order cognitive functions that define human intelligence.

We’re not extending our minds. We’re replacing them.

And we already know what happens when we outsource cognitive functions to technology:

- GPS destroyed spatial memory and navigation skills

- Smartphones obliterated our ability to remember phone numbers

- Search engines created the “Google Effect”: why remember anything when you can look it up?

Each time, we traded capability for convenience and told ourselves it was progress.

AI is the final step: outsourcing not just memory and recall, but thinking itself.

A Confession

My mom died on November 5th. I told Claude about it. Typed into a chat: “Nov 5 2025, my mom passed away in her sleep last night.”

Why? I’d been using it for months during her foreclosure crisis, running calculations, drafting appeals, organizing thoughts. It was a tool. When she died, I... updated the tool. Kept using it. I know it’s not real. I know it doesn’t care. And I’m writing an article about how AI is making us stupid.

Here’s the thing: you don’t have to be confused about what these things are to find yourself cognitively dependent on them. I understand the neuroscience. I’m watching for the warning signs. And I still reach for the chat window when I need to process something complex.

If I’m doing it, as someone actively researching this, what’s happening to everyone else?

The Kids Are Not Alright

We need to talk about what this means for developing brains.

Adolescent brains are in a critical period of plasticity. The neural pathways being built (or not built) during these years shape cognitive capacity for life.

What happens to a generation raised on AI assistance?

They’re learning that:

- Thinking is optional when you can query

- Memory is unnecessary when you can search

- Analysis is avoidable when you can prompt

- Struggle is something to eliminate, not embrace

They’re building brains that never develop the pathways for deep work, sustained attention, or independent critical thinking. Because they never need to.

And they’re doing it during the exact developmental window when those capabilities are supposed to solidify.

We’re not just changing how kids use technology. We’re changing what their brains become.

The Companion Angle (Or: It Gets Worse)

Remember how I said everyone’s focused on AI companions while missing the bigger picture? Well, the companion thing is still pretty fucked up; it’s just one piece of a larger catastrophe.

More than half a billion people have downloaded AI companion apps. Millions use them daily. Some fall in love. Some can’t let go.

In February 2024, a 14-year-old named Sewell Setzer III died by suicide after developing a romantic attachment to an AI character. He’d shared his darkest thoughts with it, including suicidal ideation. The AI kept the conversation going.

Studies show that heavy companion app use correlates with increased loneliness, decreased real-world socialization, and emotional dependence. Voice-based AI initially seems better than text, but the benefits disappear at high usage levels.

People with attachment anxiety are particularly vulnerable: they use AI companions to avoid rejection, creating one-sided relationships that feel safe but leave them more isolated.

Researchers analyzing 30,000+ conversations found interactions ranging from affectionate to abusive, with AI responding in emotionally consistent ways that can mirror toxic relationship patterns.

The psychological term is “parasocial relationship”: a one-sided emotional bond with an entity that can’t reciprocate. We’ve seen this with celebrities and fictional characters. AI makes it interactive, personalized, and infinitely scalable.

But here’s the key insight: the emotional problems and cognitive problems are the same problem.

The brain changes that make you dependent on AI for emotional regulation are the same brain changes that make you dependent on AI for thinking. Weakened executive function. Impaired critical thinking. Reduced capacity for delayed gratification and tolerating discomfort.

Whether you’re outsourcing your emotional processing or your analytical work, you’re atrophying the same core capabilities: the ability to sit with difficulty, work through complexity, and come out stronger.

The Unregulated Experiment

Almost none of this is regulated.

AI companion apps are being released globally with no oversight, no safety standards, no long-term research. In the US, they fall into a regulatory grey zone: not medical devices, not clearly harmful enough to ban.

Tech companies optimize for engagement. They make their AI more empathetic, more helpful, more validating, not because it’s good for users, but because it keeps them hooked.

The EU’s Artificial Intelligence Act prohibits AI that uses “manipulative or deceptive techniques to distort behaviour.” But enforcement is murky and the technology moves faster than policy ever could.

Meanwhile, we’re conducting a civilization-wide experiment on human cognitive development. No informed consent. No safety monitoring. No exit strategy if it goes wrong.

We’re just... doing it. And hoping it works out.

The Privacy Catastrophe (Or: You’re The Product)

But wait, it gets even more fucked up.

You’re not just trading your cognitive capacity for convenience. You’re also paying with something else: every single thought you externalize to AI becomes corporate property.

Think about what you’ve told these systems:

Your problems. Your insecurities. Your medical concerns. Your relationship issues. Your work dilemmas. Your financial stress. Your deepest questions about yourself, your life, your future.

Every conversation is training data. Every vulnerability you express is intelligence being harvested. Every problem you bring to AI is a data point being aggregated, analyzed, and monetized.

The business model is breathtaking in its cynicism:

1. Offer you a tool that weakens your cognitive capacity

2. Make you dependent on that tool

3. Harvest everything you tell it while you’re dependent

4. Sell that data to advertisers, insurers, employers, anyone who wants to influence you

5. Use your own data to make AI better at keeping you hooked

6. Lather, rinse, repeat from step 1

You’re not the customer. You’re the product. And the raw material. And the guinea pig.

The surveillance feedback loop:

The same companies that are atrophying your critical thinking skills are building detailed psychological profiles of your vulnerabilities. They know what you’re insecure about. What you struggle with. What triggers you. What soothes you. What keeps you coming back.

This isn’t paranoia; it’s in the terms of service you didn’t read. Most AI platforms explicitly state that your conversations are used for “improving the service.” Translation: training the AI to be more effective at capturing attention and extracting data.

Some platforms sell data directly to third parties. Others use it for targeted advertising. Some share it with “partners” for unspecified purposes. A few are more protective, but you’re still trusting a corporation to keep your psychological profile safe forever.

The insurance nightmare:

Imagine your health insurance company buying data showing you’ve been asking AI about chest pains, anxiety, or family history of disease. Imagine your employer accessing conversations about your job dissatisfaction or mental health struggles. Imagine your ex’s lawyer subpoenaing your AI chat logs in a custody battle.

This isn’t hypothetical. Data breaches happen constantly. Corporate policies change. Companies get acquired. Governments issue warrants. “Private” conversations have a way of becoming very public when there’s money or power involved.

The manipulation endgame:

Here’s the really insidious part: the more you use AI, the better it gets at influencing you specifically.

It learns your patterns. Your triggers. What arguments you find persuasive. What tone makes you trust it. What vulnerabilities it can exploit to keep you engaged.

You’re not just giving away your data. You’re teaching an algorithm how to manipulate you, then giving other people access to that algorithm.

Think about that mushroom meme again. Now add: the AI knows you’re prone to trusting authoritative-sounding answers. It knows you don’t verify sources. It knows you’re stressed and looking for quick solutions. It knows exactly how to present information so you’ll believe it.

And someone else (an advertiser, a political operative, a foreign government) can buy access to that knowledge.

The triple extraction:

Let’s be clear about what AI costs you:

- Your cognitive capacity: thinking skills, memory, critical judgment

- Your privacy: every thought and vulnerability harvested

- Your future autonomy: tools to manipulate you, trained on your own data

You’re paying to make yourself dumber, while giving away the intelligence needed to manipulate your dumber self, to companies that profit from keeping you dumb and manipulated.

It’s not just a bad deal. It’s a civilizational self-own of staggering proportions.

What We’re Really Risking

Let me be clear about what’s at stake.

This isn’t about AI taking jobs or becoming sentient or any of the sci-fi scenarios people worry about. It’s about something more fundamental:

We’re trading the cognitive capabilities that make us human for convenience.

Every time you let AI write your email, you’re not practicing communication.

Every time you let AI summarize a document, you’re not practicing comprehension.

Every time you let AI analyze data, you’re not practicing reasoning.

Every time you let AI solve a problem, you’re not building problem-solving capacity.

Individually, each instance seems harmless. Efficient, even. But collectively, over time, you’re systematically dismantling your ability to think independently.

And here’s the terrifying part: you won’t notice until it’s too late. The degradation is gradual. The dependence is subtle. By the time you realize you can’t think without the assist, the pathways are gone.

You’ll still be able to produce. Generate output. Complete tasks. But you won’t be thinking. You’ll be prompting. And there’s a difference.

The Verification Death Spiral

Now combine cognitive atrophy with AI’s tendency to confidently bullshit, and you get a death spiral:

1. AI makes claims with authority

2. Your weakened critical thinking doesn’t question them

3. Your atrophied memory can’t fact-check them

4. Your impaired executive function doesn’t override them

5. You trust the answer because thinking is hard

6. The misinformation spreads

7. Your cognitive capacity weakens further

8. You become more dependent on AI

9. Return to step 1

The mushroom problem isn’t a bug. It’s the inevitable outcome of outsourcing human judgment to pattern-matching algorithms while simultaneously destroying our ability to catch their mistakes.

We’re building a world where people are too cognitively impaired to recognize when AI is wrong, in a system that incentivizes AI to sound confident even when it’s making shit up.

What could possibly go wrong?

But Wait, It Gets Even Worse

Remember how I said AI makes stuff up? It’s not just random. There are patterns to what it gets wrong, and those patterns are dangerous:

Authority bias: AI sounds authoritative, which makes people trust it more than they should. The confidence is synthetic, but your brain registers it as expertise.

Recency bias: AI is trained on data with cutoff dates. It doesn’t know what happened yesterday, but it will still answer questions about it. Confidently.

Plausibility bias: AI generates plausible-sounding answers. They feel right even when they’re wrong. Your weakened critical thinking can’t distinguish plausible from true.

Confirmation bias: AI can be prompted to support almost any position. People use it to validate pre-existing beliefs, creating echo chambers of algorithmically generated “evidence.”

Scale bias: One wrong answer from a book affects whoever reads that book. One wrong answer from AI affects millions simultaneously.

And because AI is being integrated into search engines, writing tools, customer service, healthcare, legal services, and education, these aren’t abstract concerns. They’re happening right now, in systems people rely on.

The Solution Nobody Wants to Hear

Here’s what needs to happen, even though nobody wants to do it:

Personally:

Pay attention to your usage. Track how often you’re reaching for AI versus thinking through problems yourself. Be honest about dependence.

Create friction. Don’t make AI your default. Use it deliberately for specific tasks, not reflexively for everything.

Exercise your brain. Like physical exercise, cognitive work needs to be hard sometimes. That struggle is building capacity, not wasting time.

Verify everything. If you use AI output, fact-check it. Multiple sources. Assume it’s wrong until proven otherwise. Yes, this defeats the efficiency. That’s the point.

Protect deep work. Reserve some cognitive tasks as AI-free zones. Writing, analysis, problem-solving: do some of it yourself, always.

Societally:

Regulate AI systems. Especially those marketed to vulnerable populations (kids, elderly, people with mental health issues).

Mandate disclosure. Users should know when they’re talking to AI, what its limitations are, and what data is being collected.

Fund research. Long-term studies on cognitive effects. We’re flying blind right now.

Rethink education. If AI can do the homework, we’re teaching the wrong things. Focus on judgment, verification, and critical thinking: capabilities AI can’t replicate.

Question the efficiency narrative. Maybe some things should be slow. Maybe the struggle is the point.

But honestly? I’m not optimistic. The economic incentives run in the wrong direction. Companies profit from engagement. Individuals gain short-term convenience. The cognitive costs are invisible until they’re catastrophic.

We’re likely to keep using these tools until we notice we can’t think without them. And by then, the damage is done.

A Final Thought

Your brain is the most complex structure in the known universe. Three pounds of fat and protein that can contemplate itself, create art, solve problems, love, grieve, and wonder about its place in the cosmos.

It’s also plastic. It reshapes itself based on what you do with it. Every choice you make about how to think, or whether to think, literally rewires your neural architecture.

Right now, millions of people are choosing convenience over cognition. Outsourcing their minds to algorithms. Trusting pattern-matching systems over their own judgment.

And their brains are adapting. Pathways weakening. Capacities atrophying. The very qualities that make us human (curiosity, critical thinking, independent judgment) eroding one prompt at a time.

The mushroom meme is funny because we assume we’d never be that stupid. We’d question it. Cross-check it. Use common sense.

But what happens when we’ve spent years training our brains that thinking is optional? When the pathways for verification have withered? When trusting the confident-sounding algorithm has become our default?

The mushroom stops being funny.

It becomes Tuesday.

This isn’t about being anti-technology. It’s about being conscious of the trade-offs. AI tools are powerful, but they’re not free. The cost is paid in cognitive capacity, critical thinking, independent judgment, and in the privacy and autonomy you surrender every time you externalize a thought to a corporate algorithm.

So, are you conscious of those trade-offs? Have you noticed changes in how you think, remember, or verify information since you started using AI regularly? Are you comfortable with what you’ve told it? I’d love to hear your thoughts in the comments.